I just finished playing Wingspan and while it wasn’t bad per se, it just felt mundane. This doesn’t really seem unique to Wingspan; it’s a more general feeling. I just don’t feel like there is much building or scaling going on. I want to go back to that feeling of playing Dominion for the first time and seeing all the interesting interactions going on. Some of this is probably just nostalgia, and a bit is a loss of novelty from understanding things better. It just seems like more recent games like Terraforming Mars or Wingspan are neutered, balanced in a way that removes some of the zany interactions. Maybe some of this is just a lack of freedom: fewer interesting choices between cards make it hard to feel in control. Maybe the sheer number of cards makes all of them blur together. Can you name a Terraforming Mars or RFTG card? I can’t. I can name countless Dominion cards though. This is probably just a boomer rant but I need to go back and play some more Dominion I think. What games should I play to bring out the feeling of experiencing interesting and powerful interactions?

Vibes, Post Rationalism, and Burnout

In a discussion at a group meetup I defined post rationalism as “rationalism but constrained by vibes”. What I’m trying to get at is that even if you have a somewhat coherent model of “what to do” (system 2), it isn’t always easy to cajole one’s internal machinery (system 1) into doing it. For me this disconnect seems to have led to burnout.

It seems like the longer these systems live in conflict, the harder it is to get oneself to do the stuff that system 2 suggests one wants to do. Yeah, I *should* eat better, do more exercise, write that blog post, check the mail, etc. (system 2) but I don’t want to (system 1). The consequences/stakes of “not doing X” need to be stronger and stronger to induce a response, and eventually they seem meaningless. Before long, summoning the “spoons/dopamine” to get up and make a microwavable meal seems out of reach.

There are a decent number of writings that seem to address this (the Replacing Guilt series, or Meaningness), but somehow they just don’t really do it for me. They might be worth a try if you feel this way.

I’m not really sure what my approach is. I guess to start I’ve become more ok with just failing and not pushing myself. If I’m not feeling my best then I just won’t force myself to go out. I stopped going to EA meetups for around half a year and have made an effort to engage less. I’m not sure this is the best approach, as it seems to just build a bunch of fake barriers to doing things. “Well I don’t feel completely perfect so I’m just not going to try [low effort thing]” isn’t the best attitude. This sometimes makes me feel like I’m in some sort of vetocracy hell where before I can do a thing I have to make “environmental impact assessments for the 10 different ways I can overextend”. This seems to raise the already high bar for doing stuff even higher.

My desires seem to shift from “do rewarding stuff” to “rewarding stuff is hard so minimize suffering”. I feel the need to avoid any type of potential psychic pain even if it’s unlikely, or offset by minimizing another pain, or attached to something rewarding. This has a very deontological feel to it. I feel like one of those negative utilitarian people who are like “well it could result in great things but it’s going to require [making 5 awkward phone calls] so meh”.

I don’t know what the answer is here. The Acceptance and Commitment Therapy strategy seems the closest to reasonable: committing to and doing things that stretch one’s boundaries without triggering trauma/burnout, and slowly expanding what one can do. Problems with this: boundaries are fuzzy, commitment and action can be temporally separate (leading to flaking or excessive load), and this is going to take a long time, so I’m likely to flake before it succeeds.

I’m going to end the post here before it gets too long and disordered and save that for another post.

Winner’s Curse vs Bandit Algorithm

When evaluating options there is usually uncertainty in our estimates of outcomes. One way this manifests is the winner’s curse. How does this work?

Well imagine we do the obvious thing. We do research on tens or hundreds of charitable interventions, estimate the expected value for each, and then pick the intervention with the largest one. What could go wrong? A nasty combination of selection and uncertainty. By picking the best charity we are likely to pick a charity that is highly overestimated in its effectiveness. That’s fine right, we just temper our expectations? Well not quite. Our interventions are likely to have significantly different levels of uncertainty. As a result we are likely to pick a highly uncertain estimate which in turn is likely to be highly overestimated.

Consider a world with 100 interventions that are identically good. We are able to estimate 50 of them with great accuracy and 50 with very limited accuracy. If we pick the largest of these estimates, we are almost certain to pick one of the highly uncertain ones. Now suppose the less accurately estimated interventions were actually a bit less effective: we’d still be very likely to pick one of them. This should illustrate how selection effects similar to the winner’s curse push us to penalize highly uncertain estimates.
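The thought experiment above can be checked with a small simulation. This is only a sketch under assumptions that are mine rather than the post's: Gaussian estimation noise, a true value of 1.0 for every intervention, and standard deviations of 0.1 and 1.0 for the "accurate" and "inaccurate" groups.

```python
import random


def simulate_winner(trials=2000, n_each=50, true_value=1.0,
                    low_sd=0.1, high_sd=1.0, seed=0):
    """Pick the highest estimate among equally good interventions.

    Returns (share of trials won by the noisy group,
             average amount by which the winning estimate overshoots the truth).
    The noise levels and true value are illustrative assumptions.
    """
    rng = random.Random(seed)
    noisy_wins = 0
    total_overestimate = 0.0
    for _ in range(trials):
        # Every intervention has the same true value; only the noise differs.
        estimates = [(rng.gauss(true_value, low_sd), "precise")
                     for _ in range(n_each)]
        estimates += [(rng.gauss(true_value, high_sd), "noisy")
                      for _ in range(n_each)]
        best_estimate, group = max(estimates)
        noisy_wins += group == "noisy"
        total_overestimate += best_estimate - true_value
    return noisy_wins / trials, total_overestimate / trials


share, overestimate = simulate_winner()
print(f"winner came from the noisy group in {share:.1%} of trials")
print(f"average overestimate of the winning estimate: {overestimate:.2f}")
```

With these settings the winner almost always comes from the noisy group, and its estimate overshoots the truth by a wide margin even though no intervention is actually better than any other.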

Now to an illustration of where we might look favorably on uncertain estimates: the more incremental world of bandit algorithms. A bandit is a lever we can pull that gives us some reward. Often we are considering a multi-armed bandit: we have multiple levers to pull, each of which gives us an uncertain reward from some distribution. We need to balance exploiting the lever we think is the best against exploring the other, less certain levers. Here, we are making a series of donations or interventions over time. In contrast to the single donation case, we now want to look at under-investigated interventions that may have a worse expected value, in the expectation that after more trials we may find one to have a higher expected value than our current best intervention. Here we will go for an uncertain intervention even if it has a worse expected value in order to get more information, while before we would be cautious about using a highly uncertain intervention even if it had a better expected value. Bandit algorithm effects push us to seek out highly uncertain estimates.
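To make the exploration bonus concrete, here is a minimal sketch of UCB1, one standard bandit algorithm (my choice for illustration; the post does not name a specific one). Each arm is scored by its average reward plus a bonus that shrinks as the arm is pulled more, so rarely tried arms get the benefit of the doubt. The three payout probabilities are made up.

```python
import math
import random


def ucb1(true_means, rounds=5000, seed=0):
    """Run UCB1 on Bernoulli arms and return how often each arm was pulled."""
    rng = random.Random(seed)
    n_arms = len(true_means)
    pulls = [0] * n_arms
    totals = [0.0] * n_arms
    for t in range(1, rounds + 1):
        if t <= n_arms:
            arm = t - 1  # pull every arm once so each has an estimate
        else:
            # Average reward plus an uncertainty bonus that favors
            # arms we have tried less often.
            arm = max(
                range(n_arms),
                key=lambda a: totals[a] / pulls[a]
                + math.sqrt(2 * math.log(t) / pulls[a]),
            )
        reward = 1.0 if rng.random() < true_means[arm] else 0.0
        pulls[arm] += 1
        totals[arm] += reward
    return pulls


# Hypothetical interventions with success rates unknown to the algorithm.
pulls = ucb1([0.3, 0.5, 0.7])
print(pulls)
```

Every arm keeps getting occasional pulls, but most of the budget ends up on the arm that looks best once the uncertainty has been worked down.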

Now how do we reconcile these? Well, uncertainty comes from different places, and some or much of it can be mitigated by trial. A highly uncertain anti-poverty measure will likely become clearer after enough RCTs and funding. In this case I think we can largely lean towards a bandit-oriented view. There are some issues: the world is changing, so some interventions may change in effectiveness over time and the distribution is certainly not fixed. But largely, when we do more of an intervention we get more information about its effectiveness.

I think my great fear about long termism and less certain interventions is that largely we don’t get as much information about effectiveness over time. Maybe this is just a feeling, though: as we do more research into AI and existential risk we will reduce the uncertainty. But I’m pessimistic, and expect that we will hit a wall and not be able to reduce the uncertainty further.

Trump silencing was good

Surprised that I even needed to write that title but yes Trump being removed from Twitter, Facebook, and basically the entire web was Actually Good. In my followers I’m seeing a huge amount of nitpicking and contrarianism around this.

Q: Censoring Trump will push his followers to alternative media.

A: Yes, the right will look at moving to alternative media like Parler. They’ve already been doing this. I’m skeptical that this will be a big development as we’ve seen consistently that creating a social media clone but with different moderation (Parler still has moderation) just isn’t a viable business model. The notion that social media should tolerate misinformation from the right so they don’t make their own echo chamber that will be worse is “we’ll impeach Trump when he does something truly horrible” logic. Social media frankly requires a wider appeal than a news empire like Fox News.

Q: But this is cancel culture! This will be used to silence someone/something you care about!

A: This level of coordination is quite rare among the various tech platforms. These are for-profit companies! Kicking people off means less revenue and is generally bad. There is some ideological diversity here so it’s pretty hard to get completely removed.

Q: But this happens all the time with drugs and sex workers!

A: I think this is quite different in nature. Drugs and sex work are made illegal by most governments. This bubbles up through payment processors and advertisers limiting access to those industries due to conservatism and legal risk. This is bad and here tech/private industry is cooperating and extending the reach of government. Silencing the President of the United States is directly opposing the government.

Q: But why did they wait so long? Trump et al have been doing this for 4 years.

A: The recent action seems to be a combination of:

- Trump is about to leave so not enough time to punish any companies for their actions.

- Democrats took power so doing things to gain Democratic regulatory favor is desirable.

- An event of significance took place that they could point to and justify their actions.

The fact it took this long suggests that tech censoring a standing leader like this isn’t going to be a common occurrence.

Q: Doesn’t this mark the start of neo-feudalism? Big tech superseding government?

A: I guess, but I’m skeptical this is the biggest development we should draw from this. Tech isn’t a single bloc, and even when the companies do largely unify (like against GDPR) they don’t always win against government. If you are worried about big tech unifying, against its economic incentives, to censor the outgoing President of the United States, shouldn’t you be paying more attention to cases where its incentives are friendlier to doing so, such as small startups threatening its profits?

Lazard Capital Cost Errors

A reminder to never doubt the chance for a spreadsheet error.

Lazard group puts out some of the most respected estimates of electricity costs. Their latest estimates (found here), as well as previous estimates I’ve looked at, appear to have an error in their capital cost calculations for natural gas.

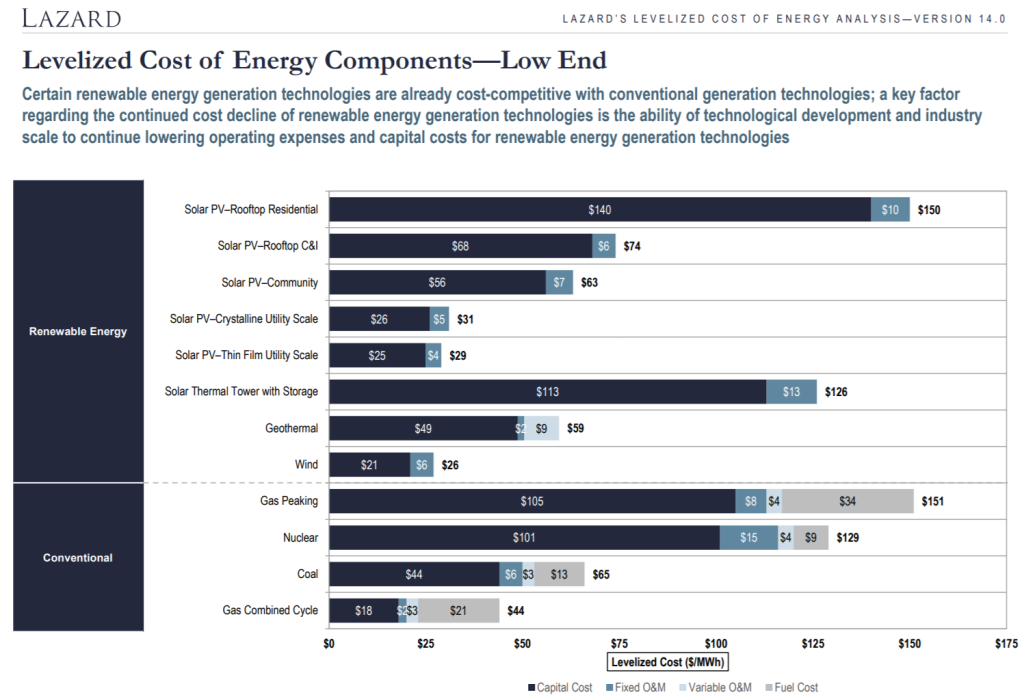

To start with, here we have an estimate of the costs broken out by source.

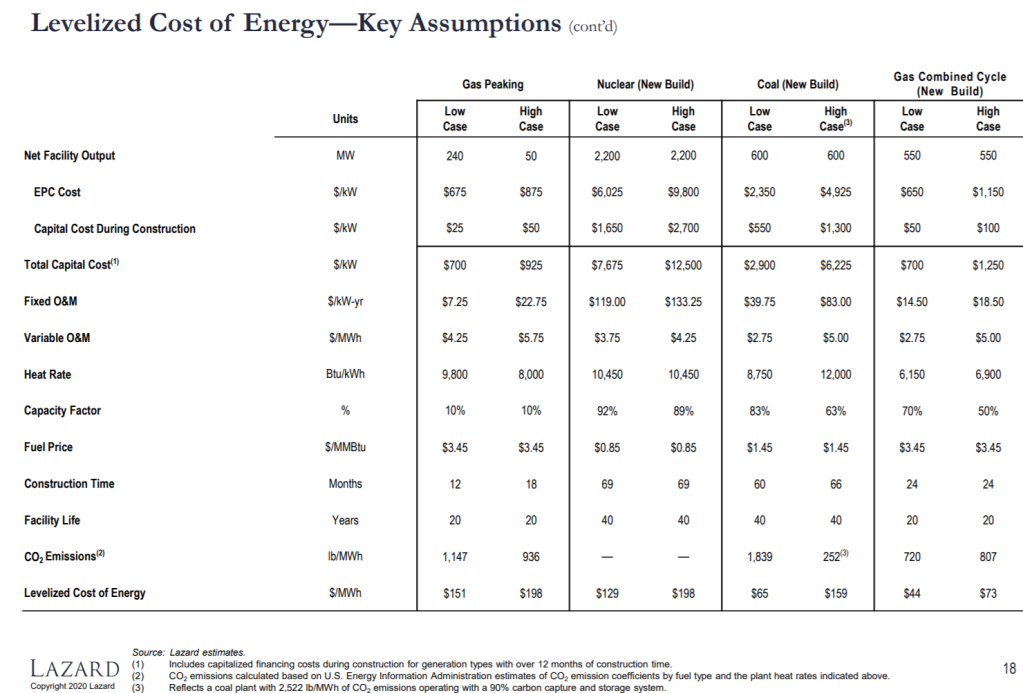

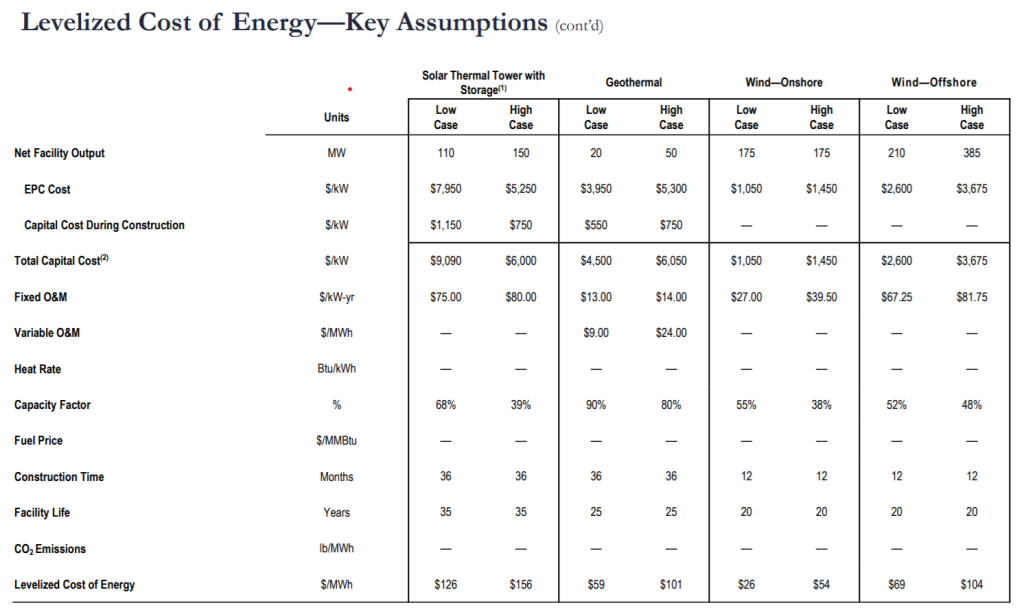

Now let’s look at the assumptions pages:

Gas Peaking Cost $700 Capacity Factor: 10%

Now something funny seems to be going on here. Dividing each capital cost per kW by its capacity factor gives the capital cost per kW of average output, shown next to the capital cost component of the levelized cost:

$1050/55% = $1909/kW for wind -> $21 per MWh

$700/70% = $1000/kW for combined cycle -> $18 per MWh

$700/10% = $7000/kW for gas peaking -> $105 per MWh

The value for combined cycle doesn’t make sense. Absent some other difference, we’d expect the $/MWh figure to be proportional to the $/kW figure, suggesting the capital cost of combined cycle should be about $11/MWh instead of the $18/MWh estimate. I can’t find a difference that explains this. All 3 plants have 20 year facility lives. While combined cycle has a 12 month longer construction time, with at most a 12 percent interest rate and the slides stating that capital financing costs are included in the capital costs for projects over 12 months build time, this is insufficient to explain the difference.
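The proportionality check can be reproduced in a few lines. This is a sketch of the reasoning above, not of Lazard's actual model: it takes wind as the reference plant (as the "about $11" figure implies) and assumes the per-energy capital cost scales linearly with capital cost divided by capacity factor.

```python
# Figures quoted in the post: capital cost ($/kW), capacity factor, and the
# reported capital-cost component of levelized cost (per unit of energy).
plants = {
    "wind": {"capex": 1050, "capacity_factor": 0.55, "reported": 21},
    "combined cycle": {"capex": 700, "capacity_factor": 0.70, "reported": 18},
}


def capex_per_average_kw(plant):
    """Capital cost per kW of average output rather than nameplate output."""
    return plant["capex"] / plant["capacity_factor"]


# Use wind as the reference: reported cost per $/kW of average output.
wind = plants["wind"]
reference_ratio = wind["reported"] / capex_per_average_kw(wind)

combined_cycle = plants["combined cycle"]
implied = reference_ratio * capex_per_average_kw(combined_cycle)
print(f"implied combined cycle capital cost: {implied:.1f}")
print(f"reported combined cycle capital cost: {combined_cycle['reported']}")
```

The implied value depends on which plant is used as the reference, so treat it as a sanity check rather than a corrected figure.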

This appears to be a spreadsheet error on Lazard’s part. I’m surprised this hasn’t been caught.

The Qattara Depression Project was stupid but might make sense now

Does the Qattara Depression ring a bell for anyone? It’s a large depression below sea level in Egypt that is fairly close to the Mediterranean. However, it’s better known as part of a hare-brained scheme to use 213 nuclear weapons to connect it to the Mediterranean, creating a large salty lake and generating electricity from the water flowing in from the Mediterranean.

Now, for a cost-benefit analysis. The project would generate around 330-670 MW [1]. Yeah, that’s it, not even a gigawatt: the project called for detonating 213 megaton-class weapons for a 700 MW power plant. A nuclear power plant like, say, Three Mile Island would generate more power with a lot less complexity for about 2 billion dollars. Aside from the nukes there would be other issues. The area was still mined from WWII, and using saltwater would create corrosion issues. Pumped hydro storage was also proposed, but it was not nearly as important then as it is now, when we are adding more renewable power and trying to deal with intermittency.

So now, let’s evaluate what we get in an era of climate change and cheap renewables. Let’s do some value stacking.

Firstly, one funny feature is that the depression is large enough to have an effect on world sea level. The depression is about 1200 cubic km and world oceans are around 361 million sq km. So the depression would, once fully filled, lower world sea level by 3-4 mm, which is about 1 year of sea level rise averted. If we value global emissions at something like 2 trillion dollars in damage ($50/tonne) and maybe sea-level rise at 10 percent of that, that’s a cool 200 billion dollars.
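The sea level arithmetic is simple enough to write out. The inputs are the post's own round numbers; the only thing added here is the unit conversion.

```python
# Figures from the post.
depression_volume_km3 = 1200
ocean_area_km2 = 361e6

# Volume spread over the ocean surface gives a height in km; convert to mm.
sea_level_drop_mm = depression_volume_km3 / ocean_area_km2 * 1e6
print(f"sea level drop: {sea_level_drop_mm:.1f} mm")

# Valuation: one year of emissions damage, with sea-level rise assumed
# (as in the post) to be 10 percent of that damage.
annual_emissions_damage_usd = 2e12
sea_level_share = 0.10
value_usd = annual_emissions_damage_usd * sea_level_share
print(f"value of one year of averted rise: ${value_usd / 1e9:.0f} billion")
```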

Power-wise, we get the 700 MW, which is worth somewhere around $3-10 per W, so $2.1-7 billion. Secondly, we get the immense storage capacity of 198 TWh (about 5 percent of US annual electricity usage). Using it as storage would negate the sea level decrease though. With that amount of storage the main challenge is needing to add a huge amount of generation to use it on a reasonable timescale. You’d need 20 GW, or over 10 times the actual generation capacity, to use all that in a year! This might necessitate a wider channel at a huge cost, so I’m not really sure how to value that, but maybe somewhere around $1/kWh might be reasonable, so $200 billion.

Another potential option, not covered here for brevity, is pairing the project with solar power in the area, which it suits very well, using the depression to store the power for smoother deployment.
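A rough cross-check of the storage numbers, as a sketch. The 198 TWh, 700 MW and $1/kWh figures are the post's; the 60 m head (taken from the footnote), fresh-water density and lossless conversion are my simplifying assumptions.

```python
HOURS_PER_YEAR = 8760
JOULES_PER_TWH = 3.6e15

# Potential energy of the full volume dropping through the assumed head.
volume_m3 = 1200 * 1e9          # 1200 cubic km
water_density_kg_m3 = 1000      # assumption: fresh-water density
gravity_m_s2 = 9.81
head_m = 60                     # assumption: lake surface 60 m below sea level
energy_twh = (volume_m3 * water_density_kg_m3 * gravity_m_s2 * head_m
              / JOULES_PER_TWH)
print(f"potential energy of a full fill: {energy_twh:.0f} TWh")

# Average power needed to cycle the post's 198 TWh once per year.
storage_twh = 198
average_power_gw = storage_twh * 1000 / HOURS_PER_YEAR
multiple_of_planned = average_power_gw / 0.7
print(f"average power to cycle it in a year: {average_power_gw:.1f} GW")
print(f"that is about {multiple_of_planned:.0f} times the 700 MW plant")

# Value of the storage at the post's guess of $1 per kWh of capacity.
storage_value_usd = storage_twh * 1e9 * 1.0
print(f"storage value: ${storage_value_usd / 1e9:.0f} billion")
```

Under these assumptions the potential energy lands close to the quoted 198 TWh, and the required power comes out a little above the 20 GW mentioned above.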

Now to the costs. The main cost would be the 50-100 km canal connecting to the Mediterranean Sea. A Suez Canal expansion was completed recently in only one year at 23 m deep and 210 m wide and 35 km long for 4.5 billion dollars (surprisingly fast and cheap). Assuming this width is enough and doubling costs due to remoteness still leaves us with a reasonable cost of maybe 20 billion dollars. Given that this is the main uniquely high cost and it is much smaller than the benefits, the project looks reasonable. EDIT: There are 200 m high barriers that would be expensive to pass. EDIT: Taking the estimates here suggests even with optimistic estimates of canal costs we’d still only achieve something like $1000/kW, which is at the fringe of feasibility.

So to recap, the project’s original rationale, power generation via evaporation, is largely garbage and only worth a few billion dollars. Evaporation is actually pretty slow and precipitation is a much more leveraged way to generate power (traditional dams). However, in a world concerned about sea level rise and intermittent and cheap renewable energy, the storage and the potential to lower global sea level raise the project’s value by an order or two of magnitude and render it viable again.

[1] My two Wikipedia-linked sources estimate 12,100 km^2 and 19,200 km^2 area at 60 m depth and about 1.41 m/year and 1.9 m/year of evaporation.
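A sketch of how the footnote's figures translate into power. The assumptions are mine: the inflow exactly replaces evaporation, the full 60 m is usable head, water density is 1000 kg/m^3, there are no turbine losses, and the smaller area is paired with the lower evaporation rate. It lands near, though not exactly on, the 330-670 MW range.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
WATER_DENSITY_KG_M3 = 1000   # assumption: fresh-water density
GRAVITY_M_S2 = 9.81
HEAD_M = 60                  # assumption: full 60 m is usable head


def evaporation_power_mw(area_km2, evaporation_m_per_year):
    """Hydro power available when inflow just replaces evaporation."""
    flow_m3_per_s = area_km2 * 1e6 * evaporation_m_per_year / SECONDS_PER_YEAR
    watts = flow_m3_per_s * WATER_DENSITY_KG_M3 * GRAVITY_M_S2 * HEAD_M
    return watts / 1e6


low_mw = evaporation_power_mw(12_100, 1.41)
high_mw = evaporation_power_mw(19_200, 1.9)
print(f"low estimate: {low_mw:.0f} MW, high estimate: {high_mw:.0f} MW")
```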

Addresses vs Phone Numbers

When you get a new phone in nearly all cases you can transfer your phone number to your new phone. You don’t have to change your phone number anywhere. It just works!

When you move addresses you can get a really poor version of this by forwarding your mail. But that only applies for a year. And it only applies to mail, not to packages. Why do the USPS and other companies not offer an address that behaves like a phone number? I could have a single address of maybe 12-15 digits that I could give to everyone. It’d be maintained in some sort of registry and I would be the only one who could change it. When I move I could simply change the physical address it mails to in one place. This would be a great improvement.
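A toy sketch of what such a registry could look like. Everything here is hypothetical: the `AddressRegistry` class, the 12-digit code and the owner token are illustration only and do not correspond to any existing postal system.

```python
import secrets


class AddressRegistry:
    """Maps a stable, portable code to whatever the current physical address is."""

    def __init__(self):
        self._records = {}

    def register(self, physical_address):
        """Create a new 12-digit code and a secret token for its owner."""
        code = "".join(secrets.choice("0123456789") for _ in range(12))
        while code in self._records:  # extremely unlikely, but avoid collisions
            code = "".join(secrets.choice("0123456789") for _ in range(12))
        owner_token = secrets.token_hex(16)
        self._records[code] = {"address": physical_address, "token": owner_token}
        return code, owner_token

    def update(self, code, owner_token, new_address):
        """Only the holder of the owner token may repoint the code."""
        record = self._records[code]
        if not secrets.compare_digest(record["token"], owner_token):
            raise PermissionError("only the owner can change this address")
        record["address"] = new_address

    def resolve(self, code):
        """What a carrier would look up at delivery time."""
        return self._records[code]["address"]


registry = AddressRegistry()
code, token = registry.register("1 Old Street, Springfield")
registry.update(code, token, "2 New Avenue, Shelbyville")
print(code, "->", registry.resolve(code))
```

Senders only ever store the code, so a move is a single `update` call rather than a round of change-of-address forms.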

Lots of other things would become much easier. States/municipalities could leverage this address change for their records instead of having people bring utility bills in. Mailing could be made more robust by using error correction on the code so that misreading a single digit doesn’t impact delivery.

I can’t find any evidence of anything like this around the world which is surprising to me.

Saving 15-20 Percent More From Charitable Donations

EDIT: tax straddle rules prevent this (I think).

EDIT: this appears to be strategy 5 here.

(I’m not a tax attorney/accountant and have no imminent plans to try this. Consider this speculative)

TLDR: You can save almost an additional capital gains rate (15-20%) on your charitable donations if you donate appreciated assets or do some silly-looking financial transacting.

One of the side effects of charitable donations, at least in the US, is the tax savings. Donations are deductible from your income tax. This will lower your tax bill by the amount you donated times your marginal rate. (This assumes that you are itemizing and not taking the standard deduction). So if you are in, say, the 25% tax bracket then you would get 25 cents back for every dollar donated, so 25 percent efficiency. This is great but what if you could do better?

One way to do better is to donate highly appreciated capital assets. Donating assets is deductible in the same way donating cash is, by deducting the worth of the assets donated as if they were cash, and it also avoids any capital gains tax on the assets. So if you bought a stock at 1 dollar and donated it when it was worth 10 dollars, you would not only save (25% income tax rate) * $10 = $2.50 but would also save (15%) * $9 = $1.35 in capital gains tax, for a net saving of 38.5%.
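The arithmetic for donating an appreciated asset, written out as a small function. The rates are the example's (25% income tax, 15% capital gains); the function name is just for illustration.

```python
def asset_donation_savings(cost_basis, market_value,
                           income_tax_rate=0.25, capital_gains_rate=0.15):
    """Tax saved per dollar donated when giving an appreciated asset."""
    income_tax_saved = income_tax_rate * market_value
    # Capital gains tax that would have been due had the asset been sold.
    gains_tax_avoided = capital_gains_rate * (market_value - cost_basis)
    return (income_tax_saved + gains_tax_avoided) / market_value


stock_savings = asset_donation_savings(cost_basis=1, market_value=10)
cash_savings = asset_donation_savings(cost_basis=10, market_value=10)
print(f"appreciated stock: {stock_savings:.1%}, cash equivalent: {cash_savings:.1%}")
```

An asset with no appreciation behaves like cash, which is why the second call returns the plain income tax rate.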

Not bad, eh? But what if you don’t have any highly appreciated capital assets? Is there a way you could manufacture highly appreciated capital assets?

If prediction market contracts were considered to be capital assets (I believe they are not in the US) you could simply find an event to bet on with every outcome having low probability and bet on every outcome. For simplicity, suppose there are 9 candidates for Democratic Presidential nominee and one “other candidate” contract, and the highest-priced contract is valued at 2 dollars for a 10 dollar payout. By buying every contract for a total of $10.50 you have guaranteed that when the market resolves you’ll end up with one contract worth 10 dollars and the rest worth nothing.

Great, you’ve just managed to guarantee a loss of 50 cents, but you’ve also put all that $10.50 into an appreciated asset that you can now donate to charity, avoiding the capital gains tax, and accrued a bunch of capital losses that you can deduct. So for $10.50 you are able to donate 10 dollars to charity, deducting (25% income tax rate) * $10 = $2.50 as well as deducting at least (15% capital gains tax rate) * ($10.50 - $2.00) = $1.275, meaning that it only effectively cost you $6.725 to donate 10 dollars to charity, for a savings of 32.75 percent, which is better than the 25 percent savings from just donating cash. In the limit, with no trading losses and arbitrarily small assets, you can deduct the full income tax rate and capital gains rate (so 40% in our examples).
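The same numbers as a worked calculation. It follows the example's assumptions, including the speculative premise that the contracts count as capital assets, and takes the worst case where the most expensive contract is the winner, so the deductible losses are smallest.

```python
income_tax_rate = 0.25
capital_gains_rate = 0.15

total_spent = 10.50        # cost of buying every contract
payout = 10.00             # value of the single winning contract
winner_cost = 2.00         # worst case: the priciest contract wins

# The winning contract is donated: deduct its full value against income.
income_tax_saved = income_tax_rate * payout
# The losing contracts expire worthless: their cost is a capital loss.
capital_losses = total_spent - winner_cost
loss_tax_saved = capital_gains_rate * capital_losses

effective_cost = total_spent - income_tax_saved - loss_tax_saved
savings_rate = 1 - effective_cost / payout
print(f"effective cost of donating ${payout:.2f}: ${effective_cost:.3f}")
print(f"savings rate: {savings_rate:.2%}")
```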

Are there financial assets in the real world that might work for this? I’m not so sure. Binary range or tunnel options sound like what you’d want, but they don’t seem to be structured the way you’d want: each option includes the others instead of excluding them, making this type of strategy impossible. Alternatively, if one was risk neutral with their donations, one could construct highly appreciated assets by simply investing in a highly risky asset and get the same effect that a risk-averse donor would get from the hedged version.

Why have I not heard of this before? It is a pretty unusual situation and requires some odd financial techniques (it allows for arbitrage). Maybe charitable donors don’t usually concoct unusual tax schemes.

EDIT: This appears to be essentially identical to strategy 5 here. A few addenda: it looks like you will need to hold your options for at least one year for them to become long-term capital gains per here. “Property is capital gain property if you would have recognized long-term capital gain had you sold it at fair market value on the date of the contribution.”

EDIT 2: Making highly correlated bets to accumulate losses like this is what tax straddle rules are designed to handle so it is hard to do this in a risk free manner.

Talebian Empiricism is a cautionary not constructive tale

There is a dichotomy worth pointing out between predictions based on complex models (most science) and those based on simple probability (represented by N. Taleb). For events with lots of data we can easily sync these two up and there is little conflict. For very rare “black swan” events the models cannot be calibrated because the event has either never happened or happened very infrequently.

The Talebian view that we should be skeptical of models because they can’t predict very rare events is correct but not very helpful. What do we do? Give up trying to predict the world because our models might be wrong? No, we try to refine our models to better represent the world through counterfactuals and new data, always understanding that our models could be catastrophically wrong, and we look for ways to mitigate the impact if they are.

Should we be a bit skeptical that our metrics/models are covering what we care about and that the correlations we’ve observed will hold in extreme scenarios? Absolutely, and we should point out all the ways they can be wrong or too aggressive and all the trends we might be missing. But we certainly shouldn’t stick our heads in the sand and say that these trends are unknowable. Does Taleb really want to live in a world where certifying a plane requires running tens or hundreds of planes around the clock for a decade in order to be sure that it is as safe as another plane?

Complex State and Asymmetry in Games

What makes a game interesting to watch? What defines excitement? I tend to agree with the folks at fivethirtyeight that one thing that makes a game interesting to watch is a huge amount of variance in the predicted outcome throughout the game. This though misses an element that can make games very interesting to watch: a difficult to determine win probability. In most sports, most of the time it is pretty clear who is winning and who is losing, and whether their chances improved or got worse because of a play. IMO some of the most interesting situations are when it is unclear who is better off or has a better chance of winning.
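One simple way to put a number on "variance in the predicted outcome" is to add up how much the win probability moves over the course of a game. This is a sketch of that idea with made-up probability series; it is not presented as fivethirtyeight's exact formula, and it does not capture the second element, how hard the win probability is to determine in the first place.

```python
def total_swing(win_probabilities):
    """Sum of absolute changes in one side's win probability over a game."""
    return sum(abs(later - earlier)
               for earlier, later in zip(win_probabilities, win_probabilities[1:]))


# Hypothetical games, both ending in a win for the same side.
steady_win = [0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
seesaw_win = [0.5, 0.8, 0.3, 0.7, 0.2, 1.0]

steady_score = total_swing(steady_win)
seesaw_score = total_swing(seesaw_win)
print(f"steady win: {steady_score:.1f}, seesaw win: {seesaw_score:.1f}")
```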

I follow quite a bit of racing, and racing can have situations of this nature. While to most people two cars racing many seconds apart may not seem terribly exciting compared to two cars closely following one another, to me, and by the metric of win probability uncertainty, it can oftentimes be more exciting. One thing that I find uninteresting but many find quite interesting is long oval races. These races involve countless cars racing very closely together, so they should be quite exciting. However, ultimately the results of the initial 80 percent of the race are usually unimportant because positions are so easily gained and lost that they are nearly meaningless. In racing, when watching two cars executing the same strategy close together, it is fairly easy to tell who is ahead. In Formula 1, this is oftentimes especially uninteresting as it is fairly rare to see passing between two equally matched cars on the same strategy. When two cars are running completely separate strategies it can oftentimes be very interesting and stimulating to try and figure out which car is ahead.

This element of refining a model of who is winning is interesting to me. Esports and games of strategy have this element much more than normal sports. This is mostly due to the inherent asymmetry in most esports. In StarCraft, because races differ, it can be difficult to directly compare how two players are doing. In DOTA, because one team may be better equipped for the late game, it may be unclear if the other team is far enough ahead to finish the game. Or the two teams may be trying to win the game in entirely different ways and it is difficult to figure out how these will interact. Will one team be able to spread out and poke the other team to death, or will the other team be able to group up and brute force a win?

The most interesting games are those where either an entirely new strategy is unveiled, resulting in an update in how the game can be played, or the game goes so far outside the realm of normal that it is extremely hard to know who is ahead. In chess the most beautiful games are oftentimes those where our normal heuristics for who is ahead are wrong: games where many pieces are sacrificed to make way for a final checkmate.

I’d like to add a little bit about stories here. I generally am in favor of hard sci-fi but sometimes it is too limiting. What I really want is a clearly defined world where I can speculate about what comes next with certainty that the conundrum won’t be solved by some plot device. My ideal that demonstrates this is something like Twitch Plays Pokemon. The world is clearly defined: we know how everything works, and it has some interesting behavior and strategy relative to reality.

So this post is kinda tied into board game design. This really just describes the difficulty of getting the “snowballiness” of a game right. Making it too easy to convert an early advantage into a win is frustrating for those who are behind. Making it too easy to come back just makes the early game meaningless. The appropriate way seems to be to make the final outcome inherently complicated to determine so it is always unclear exactly who is winning. This though will violate some other principles described here (under construction).